Mar 3, 2023

How did we get here? A brief history of machine learning

Machine learning is a subset of artificial intelligence, but the term itself wasn’t coined until 1959, by the computer gaming and AI pioneer Arthur Samuel. In the years since, the concept of AI has taken deeper root in public consciousness, inspiring countless books, films, and television series. These storylines, often eerie and sometimes terrifying, usually stray far from the concrete ‘a to b’ journey that machine learning has actually been on. That’s why we’re breaking down the history of machine learning, from its conception up to the present day.

Machine learning: Where did it all begin?

The early origins of machine learning can be traced back to 1949, before it received its name. Donald Hebb’s book The Organization of Behavior outlined the concept of “brain cell interaction”, based on theories of how neurons communicate with each other.

In 1952, Arthur Samuel entered the timeline. As an employee of IBM, Samuel developed a program for playing checkers. The program evaluated positions in the game and learned a model for choosing better moves for the machine player, improving its play as it gained more experience. Samuel defined machine learning as the field of study that gives computers the ability to learn without being explicitly programmed.

By 1957, Frank Rosenblatt had proposed the perceptron, a simple neural network unit. The design was loosely modeled on how neurons in the brain work, and it was implemented in a machine called the “Mark I Perceptron”, which was constructed for image recognition.
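
To make the idea concrete, here is a minimal sketch of the perceptron learning rule in Python. It is an illustration under our own assumptions, not a reconstruction of Rosenblatt’s original system: the function names, the learning rate, and the toy logical-AND data are all hypothetical.

```python
def train_perceptron(samples, labels, epochs=10, lr=1.0):
    """Learn weights and a bias with the classic perceptron update rule."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            predicted = 1 if activation > 0 else 0
            error = target - predicted  # -1, 0, or +1
            # Nudge the weights toward the target only when the guess was wrong.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


def predict(weights, bias, x):
    """Return 1 if the weighted sum clears the threshold, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


# Hypothetical toy data: the logical AND of two binary inputs.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]

weights, bias = train_perceptron(samples, labels)
print([predict(weights, bias, x) for x in samples])  # prints [0, 0, 0, 1]
```

A single unit like this can only separate classes with a straight line, which is why it handles AND but would fail on a pattern such as XOR.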

Ten years later, in 1967, the first programs that could recognize patterns were designed using an algorithm called “nearest neighbor”. A greedy heuristic of the same name was also one of the first approaches used to find approximate solutions to the traveling salesman problem: "Given a list of cities and the distances between each pair of cities, what is the shortest possible route that visits each city exactly once and returns to the origin city?"
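
For the traveling salesman problem quoted above, the nearest neighbor approach can be sketched as a greedy rule: from the current city, always travel to the closest city not yet visited. The Python below is a rough illustration only; the function names and city coordinates are hypothetical, and straight-line distance is assumed.

```python
import math


def nearest_neighbor_tour(cities, start=0):
    """Build a tour by repeatedly visiting the closest unvisited city."""
    unvisited = set(range(len(cities))) - {start}
    tour = [start]
    while unvisited:
        current = cities[tour[-1]]
        closest = min(unvisited, key=lambda i: math.dist(current, cities[i]))
        tour.append(closest)
        unvisited.remove(closest)
    return tour


def tour_length(cities, tour):
    """Total distance of the round trip, including the return to the start."""
    return sum(
        math.dist(cities[tour[i]], cities[tour[(i + 1) % len(tour)]])
        for i in range(len(tour))
    )


# Hypothetical coordinates for four cities.
cities = [(0, 0), (5, 0), (1, 0), (1, 3)]

tour = nearest_neighbor_tour(cities)
print(tour)                       # prints [0, 2, 3, 1]
print(tour_length(cities, tour))  # prints 14.0
```

The greedy rule is fast, but it does not guarantee the shortest route. For these coordinates, visiting the cities in the order 0, 2, 1, 3 gives a slightly shorter round trip of about 13.16.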

The next two decades saw rapid shifts in the field of neural network research. Scientists strove to establish ML as a separate entity, with the deepening research leading to the development of feedforward neural networks and backpropagation.

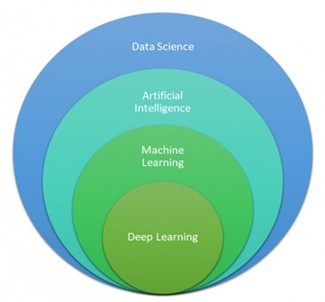

In the 1970s, a breakthrough development occurred: the foundations of backpropagation were laid. Artificial neural networks (ANNs) trained with backpropagation became a primary tool for machine learning, used to identify patterns in data too complex for human programmers to detect. In the period that followed, the machine learning field struggled to distinguish itself from AI, and the two were frequently confused. Where ML learns and predicts based on passive observations, AI involves an agent interacting directly with its environment. An AI learns and takes actions to maximize its chance of successfully achieving its goals.

This set machine learning on a different trajectory, one that prioritized data over knowledge. In the early 1990s, ML work focused on data mining, adaptive software, text learning and language learning. The period also witnessed the development of reinforcement learning algorithms, along with programs that could analyze large amounts of data and draw conclusions or learn from the results.

In 1997, Yoav Freund and Robert Schapire proposed another key machine-learning algorithm. Known as AdaBoost, it could create a strong classifier from an ensemble of weak classifiers.
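
As a rough sketch of how weak classifiers can combine into a strong one, the Python below runs three rounds of AdaBoost using one-threshold “decision stumps” as the weak classifiers. The function names and the ten-point dataset are hypothetical and chosen purely for illustration; this is a simplified version, not Freund and Schapire’s own code.

```python
import math


def stump_predict(x, threshold, polarity):
    """A weak classifier: one threshold on a single number."""
    return polarity if x < threshold else -polarity


def best_stump(xs, ys, weights):
    """Pick the stump with the lowest weighted error on the training data."""
    best = None
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            err = sum(
                w for x, y, w in zip(xs, ys, weights)
                if stump_predict(x, threshold, polarity) != y
            )
            if best is None or err < best[0]:
                best = (err, threshold, polarity)
    return best


def adaboost(xs, ys, rounds=3):
    """Train an ensemble of (alpha, threshold, polarity) stumps."""
    weights = [1.0 / len(xs)] * len(xs)
    ensemble = []
    for _ in range(rounds):
        err, threshold, polarity = best_stump(xs, ys, weights)
        err = max(err, 1e-10)  # guard against division by zero
        alpha = 0.5 * math.log((1 - err) / err)  # this stump's vote strength
        ensemble.append((alpha, threshold, polarity))
        # Raise the weight of misclassified points, lower it for correct ones.
        weights = [
            w * math.exp(-alpha * y * stump_predict(x, threshold, polarity))
            for x, y, w in zip(xs, ys, weights)
        ]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def ensemble_predict(ensemble, x):
    """Weighted vote of all stumps; labels are +1 or -1."""
    score = sum(a * stump_predict(x, t, p) for a, t, p in ensemble)
    return 1 if score >= 0 else -1


# Hypothetical data: no single threshold separates the +1 and -1 labels.
xs = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

ensemble = adaboost(xs, ys, rounds=3)
print([ensemble_predict(ensemble, x) for x in xs] == ys)  # prints True
```

On this data the best single stump still misclassifies three of the ten points, while the weighted vote of three stumps classifies all ten correctly.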

In the present day, machine learning has built upon these historic breakthroughs to serve a huge number of practical uses. Self-driving vehicles, the internet of things, and generative AI chatbots are just a few examples of the way ML has been integrated into contemporary life.

Most current machine-learning development is being done in key areas like quantum computing, better unsupervised algorithms, collaborative learning, deeper personalization and cognitive services.

But what does the future hold? Experts expect ML to one day bridge the gap between the expectations for artificial intelligence and its reality. Machine learning has already become an extremely important tool for solving complex classification problems. Its capabilities will only grow.

For more research on machine learning

Download your complimentary overview of our latest research report on machine learning. We dive into the technologies and markets of the industry, providing researchers and product developers with unrivaled insight into the landscape.

At BCC Research, we publish regular reports within the information technology bracket. If you’d like a broad overview of the IT landscape, membership with our research library may be the way forward. Get in touch below to find out more.

Olivia Lowden is a Junior Copywriter at BCC Research, writing content on everything from sustainability to fintech. Before beginning at BCC Research, she received a First-Class Master’s Degree in Creative Writing from the University of East Anglia.
